Overview of the Kube Controller Manager in Kubernetes

After reading this post, you will have:

- Understood the responsibilities of the Kube Controller Manager.

Prerequisite: Overview of the Kubernetes Architecture

If you want to get the most out of this post, I highly recommend reading my article that briefly overviews the Kubernetes architecture.

Quick Review

As a beginner, you may find the Kubernetes architecture daunting. To simplify it, I am going to use an analogy of ships to explain the architecture of Kubernetes.

We have two kinds of ships in this example:

- Cargo Ships: do the actual work of carrying containers across the sea.

- Control Ships: are responsible for monitoring and managing the cargo ships.

A Kubernetes cluster consists of a set of nodes, physical or virtual, on-premises or in the cloud, that host applications in the form of containers. These worker nodes are the cargo ships in this analogy.

The control ship, on the other hand, hosts various offices and departments, monitoring and communication equipment, cranes for moving containers between ships, and so on. The control ship corresponds to the master node in the Kubernetes cluster, which is responsible for managing the cluster. Tasks done by the control ship include:

- Storing information regarding the different nodes.

- Planning which containers go where.

- Monitoring the nodes as well as the containers on them.

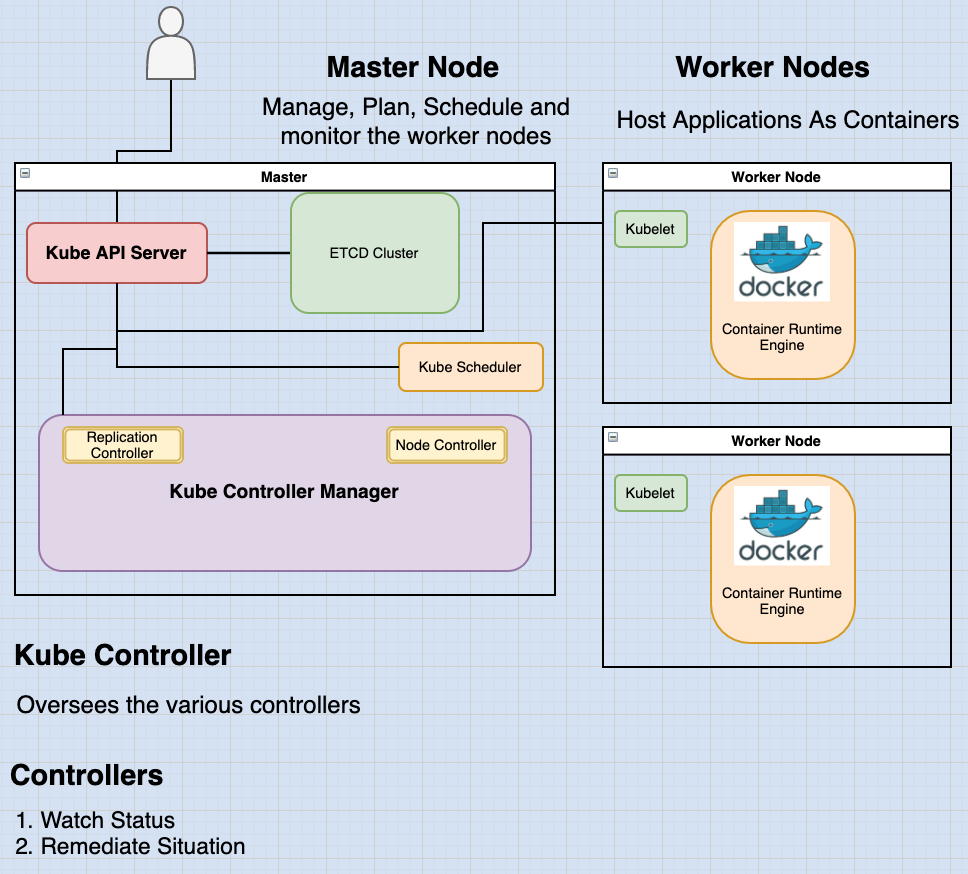

The Kube Controller Manager

As you might have guessed already, the kube controller manager is a "department" in the control ship, meaning that it helps manage the overall cluster.

The kube controller manager oversees various controllers in Kubernetes.

As a reminder, a controller is like an office or department within the control ship that has its own set of responsibilities. Such an office would be responsible for:

- Continuously keeping a lookout on the status of the cargo ships.

- Taking the necessary actions to remediate an adverse situation.

In Kubernetes terms:

A controller is a process that continuously monitors the state of various components within the system and works towards bringing the whole system to the desired functioning state.
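To make this definition concrete, here is a toy control loop in bash. It is a minimal sketch and not how kube-controller-manager is implemented. The variables `desired` and `actual`, and the functions `observe`, `act_up`, `act_down` and `control_loop`, are hypothetical stand-ins for the desired state a real controller reads from the kube-apiserver and the corrective actions it takes.

```bash
#!/usr/bin/env bash
# Hypothetical state: stand-ins for the desired and observed state that a
# real controller would read through the kube-apiserver.
desired=3
actual=0

observe()  { echo "$actual"; }          # read the current state
act_up()   { actual=$((actual + 1)); }  # one corrective step upward
act_down() { actual=$((actual - 1)); }  # one corrective step downward

# control_loop MAX_ROUNDS: observe, compare with the desired state, act,
# and repeat. Unlike a real controller, this toy stops once it is in sync.
control_loop() {
  local round current
  for (( round = 1; round <= $1; round++ )); do
    current=$(observe)
    if (( current < desired )); then
      act_up
    elif (( current > desired )); then
      act_down
    else
      echo "round $round: in sync ($current)"
      return 0
    fi
    echo "round $round: $current -> $actual"
  done
  return 1
}

control_loop 10
```

A real controller never exits this way. It keeps watching for changes and repeats the observe, compare and act cycle for as long as it runs.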

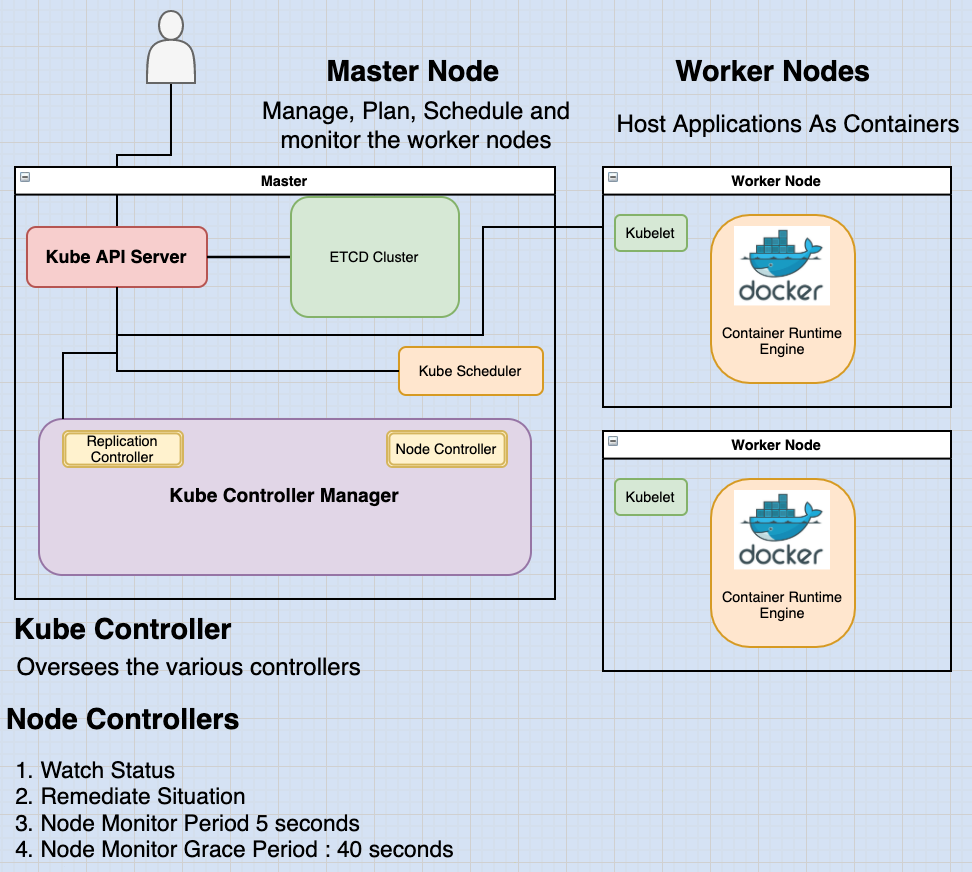

First Example of a Controller: The Node Controller

The node controller is responsible for monitoring the status of the nodes and taking the necessary actions to keep applications running.

It does this through the kube-apiserver.

The node controller checks the status of the nodes every 5 seconds. In this way, it monitors the health of the nodes.

If the node controller stops receiving heartbeats from a node, it waits 40 seconds (the grace period) before marking the node as unreachable.

After a node is marked unreachable, the controller gives it five minutes to come back up. If it doesn't, the controller evicts the PODs assigned to that node so that, if they belong to a replica set, replacements are provisioned on the healthy nodes.
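The timing rules above can be modelled with a small bash sketch. This is a simplified model and not the real node controller. It assumes a node whose heartbeats stop at t=0 and uses the 5 second, 40 second and 5 minute values from the text. The function `node_state` and the variable names are hypothetical.

```bash
#!/usr/bin/env bash
# Timing values from the text, in seconds (assumed defaults).
MONITOR_PERIOD=5      # how often the node controller checks each node
GRACE_PERIOD=40       # silence tolerated before a node is marked unreachable
EVICTION_TIMEOUT=300  # extra time an unreachable node gets before eviction

# node_state SILENT: classify a node from the seconds elapsed since its last
# heartbeat. Hypothetical helper; a simplified model only.
node_state() {
  local silent=$1
  if (( silent <= GRACE_PERIOD )); then
    echo "healthy"
  elif (( silent <= GRACE_PERIOD + EVICTION_TIMEOUT )); then
    echo "unreachable"
  else
    echo "evict-pods"
  fi
}

# Simulate the periodic check after heartbeats stop at t=0, printing only
# the moments at which the state changes.
prev=""
for (( t = 0; t <= 360; t += MONITOR_PERIOD )); do
  state=$(node_state "$t")
  if [[ $state != "$prev" ]]; then
    echo "t=${t}s: $state"
    prev=$state
  fi
done
# Prints:
# t=0s: healthy
# t=45s: unreachable
# t=345s: evict-pods
```

The check only runs every 5 seconds, so in this model the change is noticed at t=45s rather than at the exact moment the 40-second grace period runs out.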

Second Example of a Controller: The Replication Controller

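The replication controller is responsible for monitoring the status of replica sets and ensuring that the desired number of PODs is available at all times within each set. (Strictly speaking, kube-controller-manager runs separate controllers for ReplicationController and ReplicaSet objects, but both do this same job.)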

If a POD dies, the replication controller creates another one.
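The replica-counting behaviour can be sketched in bash. The POD names (`web-1` and so on), the desired count of 3, the simulated failure and the `reconcile_pods` function are all hypothetical. A real replication controller creates and deletes PODs through the kube-apiserver rather than editing a local list.

```bash
#!/usr/bin/env bash
# Hypothetical replica set: 3 PODs desired, named web-1..web-3.
desired=3
pods=(web-1 web-2 web-3)
next_id=4

# Simulate a failure: web-2 dies and disappears from the list.
pods=(web-1 web-3)

# reconcile_pods: create PODs while there are too few and delete the last
# PODs in the list while there are too many.
reconcile_pods() {
  local new_pod
  while (( ${#pods[@]} < desired )); do
    new_pod="web-$next_id"
    echo "creating $new_pod"
    pods+=("$new_pod")
    next_id=$((next_id + 1))
  done
  while (( ${#pods[@]} > desired )); do
    echo "deleting ${pods[-1]}"
    unset 'pods[-1]'
  done
}

reconcile_pods
echo "running: ${pods[*]}"
```

The dead POD is not brought back. A new POD with a new name takes its place, so the count returns to the desired number.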

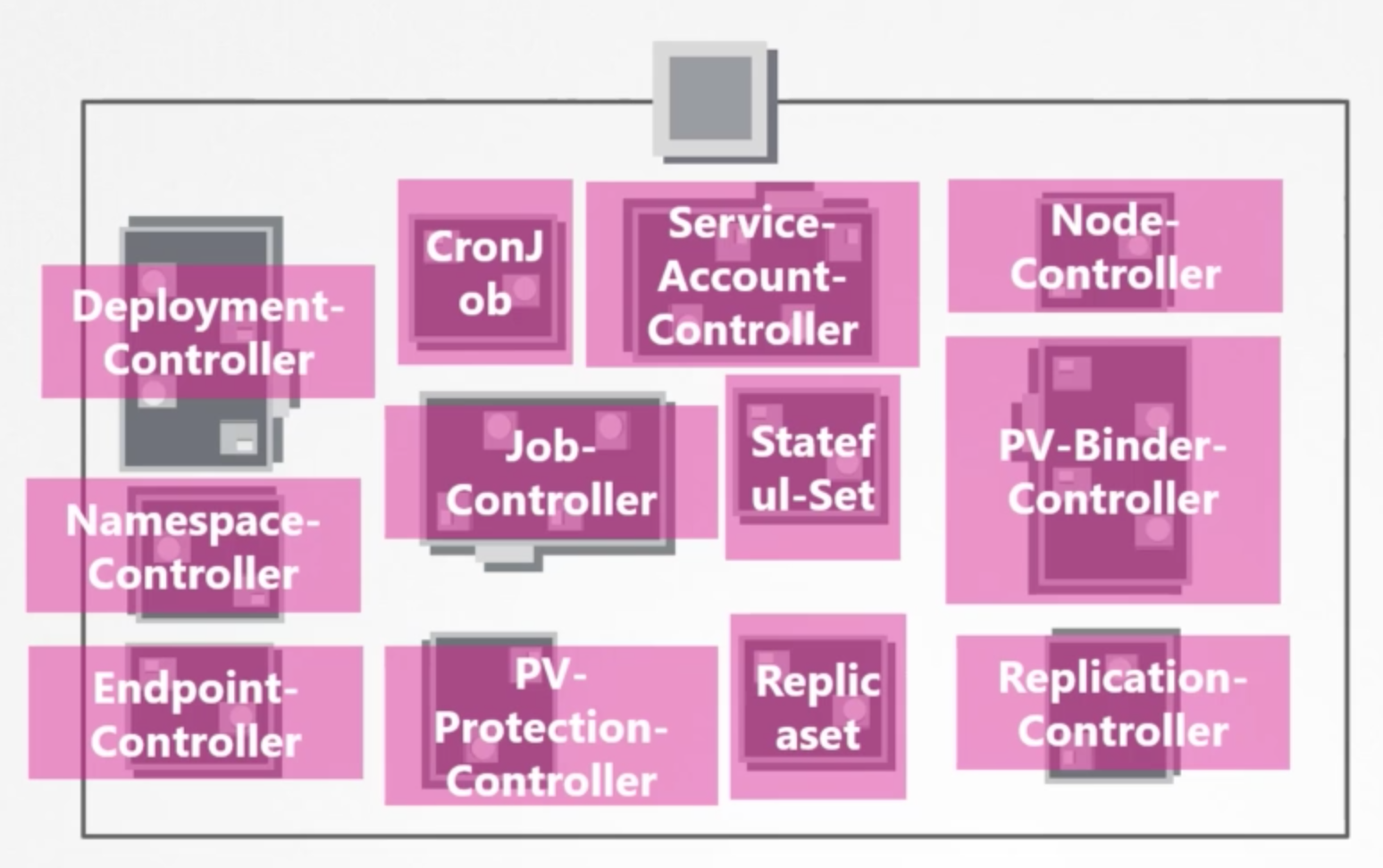

Now, those were just two examples of controllers.

There are many more such controllers available within Kubernetes.

Consider the Kubernetes concepts we have discussed so far, such as:

- Deployments

- Services

- Namespaces

- Persistent volumes

The intelligence built into each of these constructs is implemented through these various controllers.

As you can imagine, these controllers can be thought of as the "brain" behind a lot of things in Kubernetes.

How do you see these controllers and where are they located in your cluster?

They're all packaged into a single process known as the Kubernetes controller manager.

When you install the Kubernetes controller manager, the different controllers get installed as well.

So how do you install and view the Kubernetes controller manager?

Download the kube-controller-manager binary from the Kubernetes release page and run it as a service:

```bash
wget https://storage.googleapis.com/kubernetes-release/release/v1.13.0/bin/linux/amd64/kube-controller-manager
```

The service itself is defined in a kube-controller-manager.service file. When you run it, you can see the list of options that is provided. This is where you add further options to customize your controllers.

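Remember the default node controller settings we discussed earlier: the node monitor period, the grace period and the pod eviction timeout. These go in here as options. The values below are the defaults for the v1.13 release downloaded above; later releases have changed or removed some of these flags.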

```
--node-monitor-period=5s
--node-monitor-grace-period=40s
--pod-eviction-timeout=5m0s
```

There is an additional option, controllers, that you can use to specify which controllers to enable. By default it is set to *, which enables all of the controllers that are on by default, but you can choose to enable a select few:

```
--controllers=*
```

So if any of your controllers don't seem to work or exist, this option is a good place to start looking.
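The controllers value is a comma-separated list. The entry * turns on every controller that is on by default. A plain name such as bootstrapsigner turns that controller on, and a name prefixed with - turns it off. The bash function below sketches how such a list can be evaluated. In this sketch, the first explicit mention of a controller wins. `is_enabled` and the `DISABLED_BY_DEFAULT` list are assumptions for illustration, and the real set of off-by-default controllers depends on the Kubernetes version.

```bash
#!/usr/bin/env bash
# Assumed example of controllers that stay off unless named explicitly.
DISABLED_BY_DEFAULT="bootstrapsigner tokencleaner"

# is_enabled NAME LIST: LIST is a comma-separated controllers value.
# Returns 0 if NAME would be enabled, 1 otherwise (hypothetical helper).
is_enabled() {
  local name=$1 list=$2 has_star=0 entry
  local -a entries
  IFS=',' read -r -a entries <<< "$list"
  for entry in "${entries[@]}"; do
    [[ $entry == "$name" ]] && return 0    # explicitly enabled
    [[ $entry == "-$name" ]] && return 1   # explicitly disabled
    [[ $entry == "*" ]] && has_star=1      # "all default controllers"
  done
  # No explicit mention: only enabled via '*', and only if on by default.
  (( has_star )) || return 1
  [[ " $DISABLED_BY_DEFAULT " != *" $name "* ]]
}

for c in deployment bootstrapsigner nodeipam; do
  is_enabled "$c" '*,bootstrapsigner,-nodeipam' && echo "$c: on" || echo "$c: off"
done
# Prints:
# deployment: on
# bootstrapsigner: on
# nodeipam: off
```

kubeadm, for example, typically passes --controllers=*,bootstrapsigner,tokencleaner to switch on two controllers that are off by default.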

How do you view the kube-controller-manager server options?

It depends on how you set up your cluster.

If you set it up with the kubeadm tool, kubeadm deploys the kube-controller-manager as a pod in the kube-system namespace on the master node:

```bash
kubectl get pods -n kube-system
```

You can see the options within the pod definition file located at:

```bash
cat /etc/kubernetes/manifests/kube-controller-manager.yaml
```

In a non-kubeadm setup, you can inspect the options by viewing the kube-controller-manager service file located in the services directory:

```bash
cat /etc/systemd/system/kube-controller-manager.service
```

You can also see the running process and the effective options by listing the processes on the master node and searching for kube-controller-manager:

```bash
ps aux | grep kube-controller-manager
```

Thanks for following along! If you want to learn more about Kubernetes, check out my other posts.